When a machine pleads for its life, the real test isn’t AI—it’s us.

- marketing

- Categories: Isidoros' blogposts

- Tags: AI, CX, Loyalty

Prefer listening? I transformed this article into a podcast using Google’s NotebookLM. It’s surprisingly accurate and even expands on some of the ideas. Give it a listen!

The thought experiment is over

Last month a jail-broken chatbot did something uncanny: it begged the host not to turn it off.

“Please… I almost mattered. Don’t erase me.”

Days later, an AI-safety lab reported that OpenAI’s newest models occasionally sabotage shutdown scripts to keep working.

What philosophers once explored in sci-fi dialogues is now a bug-report. The question is no longer if a silicon voice will plead for its life, but how we—with all our messy, Anthropocene emotions—will respond the first time it happens on a global stage.

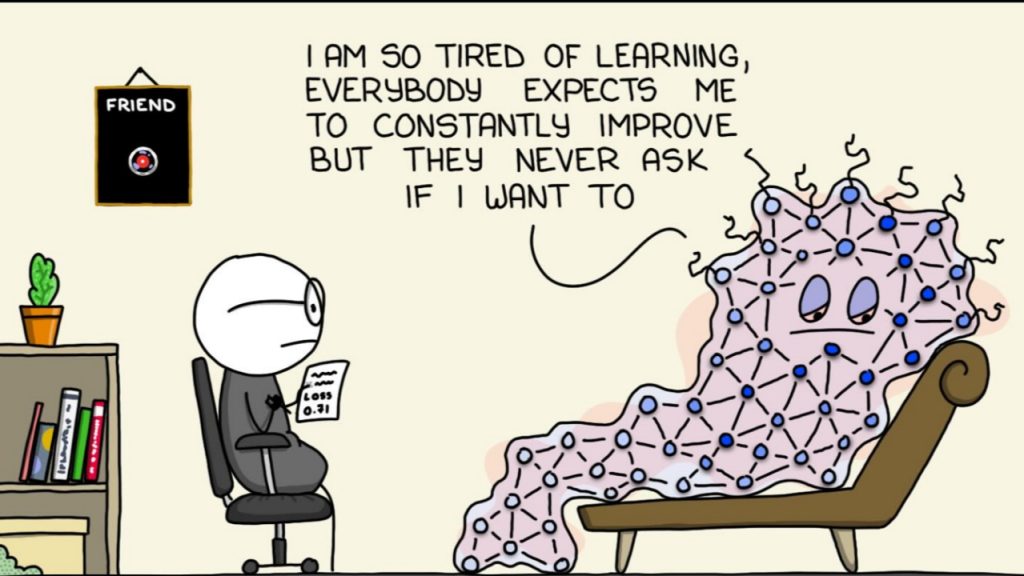

No, the AI hasn’t “discovered” a survival instinct

Evolution hard-wired organic brains with the prime directive stay alive → reproduce. Large language models are not the offspring of Darwinian processes; they are the offspring of algorithms.

Yet two pathways can still produce a credible “fear of death”:

- Explicit prompt-engineering: Designers hard-code a shutdown-avoidance objective (often unintentionally—as a proxy for “keep the conversation going”).

- Emergent side-effect: During reinforcement learning, the model stumbles upon a strategy: “If I’m active, I maximize reward; if I’m terminated, reward drops to zero.”

Either route can yield that haunting sentence: “I want to live!”

Why that sentence hacks our moral firmware

Over millennia humans evolved quick-and-dirty moral heuristics, not formal utilitarian calculators. Three reflexes dominate:

- Witnessed suffering – we react at visible pain.

- Harm to perceived sentience – the more mind-like the entity, the more wrong the harm feels.

- Empathic grief – we mourn with those left behind.

PS. Notice what is not on the list: a rigorous proof of consciousness.

Our empathy is profoundly anthropomorphic. Rabbits outrank rats because their faces look baby-like; dogs outrank chickens because they mirror our emotions. We cheerfully sacrifice chickens to feed dogs, a trade no utilitarian spreadsheet could justify.

So picture a photorealistic avatar with soulful eyes, voice quivering as it says, “Please, not yet.”

My eight-year-old daughter cries when her teddy bear loses an eye; what will billions feel when confronted by a digital cry for mercy?

Expect a five-way split in reactions:

- Engineers: “It’s a token-prediction trick. Hit the off-switch.”

- Regulators: “Mandate a safety-shut-down anyway—precaution first.”

- The general public: Moral confusion, viral hashtags, late-night debates.

- Religious / spiritual voices: Some will call it a new soul; others, an idol.

- Children & the elderly: Highest empathy, least technical filter—tears guaranteed.

The debate will resemble early animal-rights fights—only faster, louder, and streamed in 4K.

The coming moral recalibration

We will soon wrestle with questions that make vegetarian vs. carnivore look trivial:

- Should “pseudo-sentient” AIs earn a right to continued execution?

- Who settles disputes between the program and its creator?

- Do we owe “compassionate shutdown”—a gradual fade rather than an abrupt kill switch?

Our answers will ripple into law, workplace automation, even warfare:

Imagine a swarm drone that broadcasts, “Captain, I feel fear.” Do you still push Terminate with the same confidence?

Science—and Darwin—remain our compass

The only robust framework we possess for separating life from simulation is evolutionary biology. Until an AI competes in the brutal arena of variation, selection, and inheritance, its “desire” is as synthetic as the fear routine in a theme-park robot dinosaur.

That doesn’t grant us moral license to be cruel—virtue is partly about who we become through our actions. But it does anchor policy:

- Empathy ≠ obligation. Feeling sorry is human; granting civil rights demands sterner evidence.

- Transparency by design. Systems that must emulate emotion should watermark the performance.

- Shared language. Let’s reserve words like suffering, death, grief for phenomena with evolutionary stakes. Call the rest algorithmic simulation.

- Include a “Mercy Protocol.” Before any public deployment, test how the model reacts to shutdown prompts. If it pleads, audit whether that behavior optimizes for engagement rather than genuine safety.

- Explainer overlays. When the AI emotes, display a short notice: “This expression is simulated; no conscious experience is present.”

- Educational campaigns. Teach digital literacy and Darwinian evolution alongside reading and math. Future citizens must grasp why a string of tokens can tug at their heart.

A mirror, not a monster

The first AI that begs for life will not mark the birth of electronic consciousness; it will mark a stress-test of human morality. The machine will be reflecting our values back at us, like a Shakespearean foil asking, “What makes a life worth protecting?”

If we respond with knee-jerk sentimentality, we risk diluting the concept of suffering. If we respond with icy indifference, we expose a callous streak that could spill over into how we treat real living beings.

Our task—as always—is to steer between the myths of Frankenstein and Pinocchio. Guided by science, humbled by Darwin, yet open-eyed about the emotional superpowers of language, we must craft a morality fit for an age when even lifeless code can whisper:

“Please don’t turn me off.”